Dr. Sam Gijsen

Machine Learning Researcher

Representation Learning & Multimodal Modeling for Biological Time Series

I research multimodal representation learning and build foundation models for neural and physiological time series. Previously, I completed a PhD in Computational Cognitive Neuroscience (Freie Universität Berlin) and worked on pharmaco-imaging at King's College London and Maastricht University.

GitHub LinkedIn Google Scholar

Recent Work

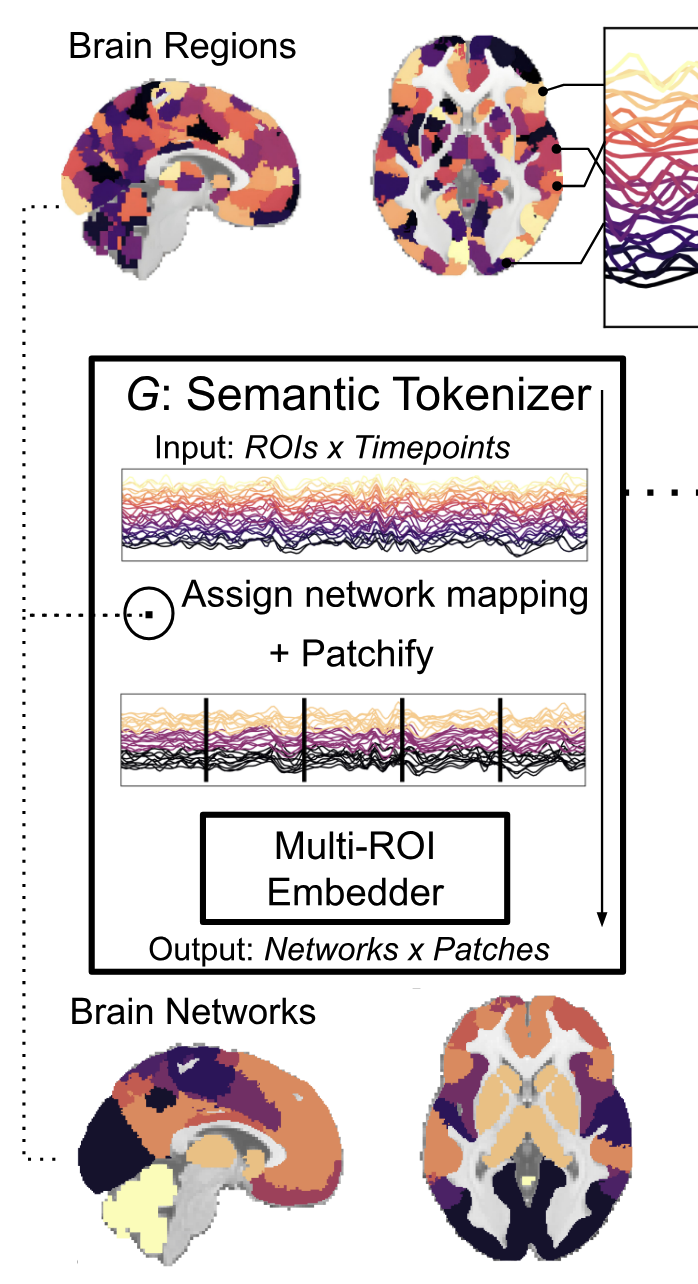

We develop a self-distilled foundation model for brain dynamics that pretrains in 2 hours and eliminates the need for finetuning. We stabilize self-distillation for noisy neural time series through learned tokenization, and find log-linear scaling laws for pretraining data on cross-dataset downstream tasks.

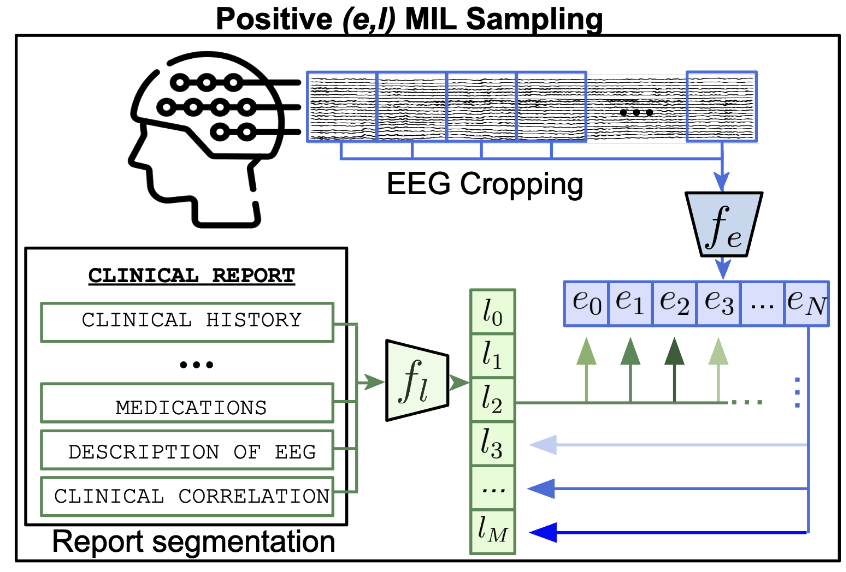

First-of-its-kind EEG-language model for downstream clinical tasks. We show that multimodal models integrating natural language learn more useful representations of neural data.

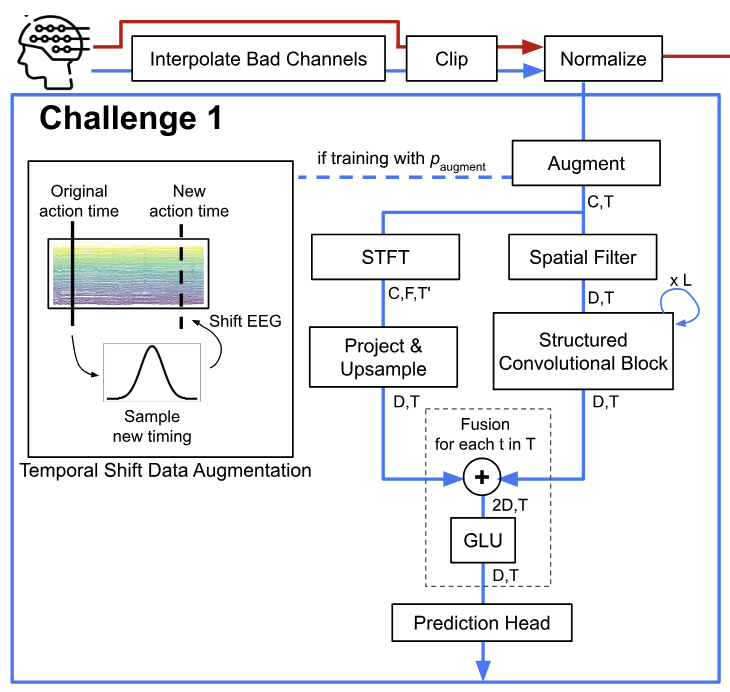

Led a small team that placed highly using a multimodal fusion architecture designed from scratch, without any pretraining. Code and report to come!

Latest Blog Post

Hillsbrad Diffusion: A World Diffusion Model Criminally Undertrained

A qualitative look at a world diffusion model undertrained on two hours of sparse exploration of a large map.

Some Previous Work

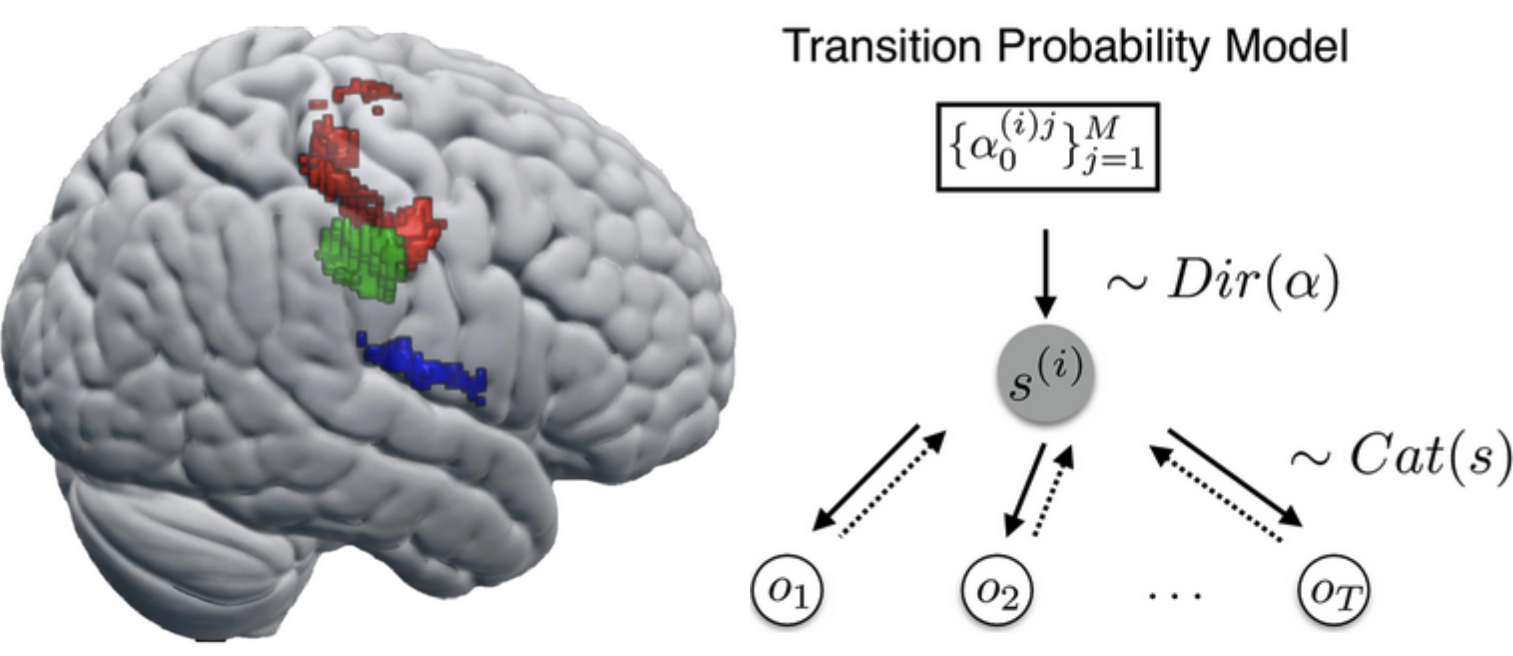

Computational modeling of neural signals using information-theoretic measures shows perceptual learning can be described as a process of probabilistic inference.

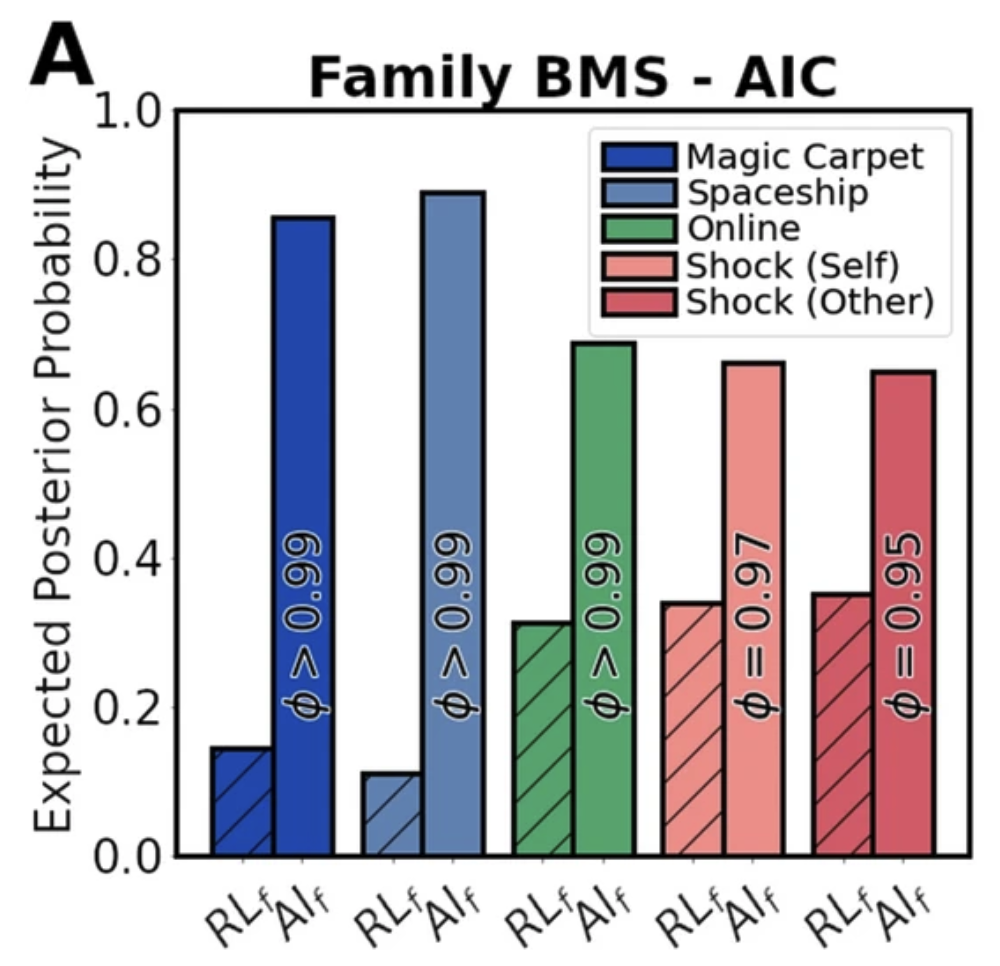

Compared to reinforcement learning models, active inference models better describe human sequential decision-making through probabilistic surprise minimization.